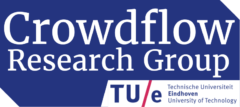

Moving Light is an unprecedented real-life experiment on crowd-light interaction, performed during the Glow Lightfestival in Eindhoven (11-18 Nov. 2017).

When you visit @GlowEindhoven make sure not to miss out on ‘Moving Light’ @PhilipsLight @TheStudentHotel #4TU @TUeindhoven @AntalHaans @alecorbetta https://t.co/wGGG2yE6Sv pic.twitter.com/ZO0wlLFjCa

— CenterHT (@Center_HT) November 16, 2017

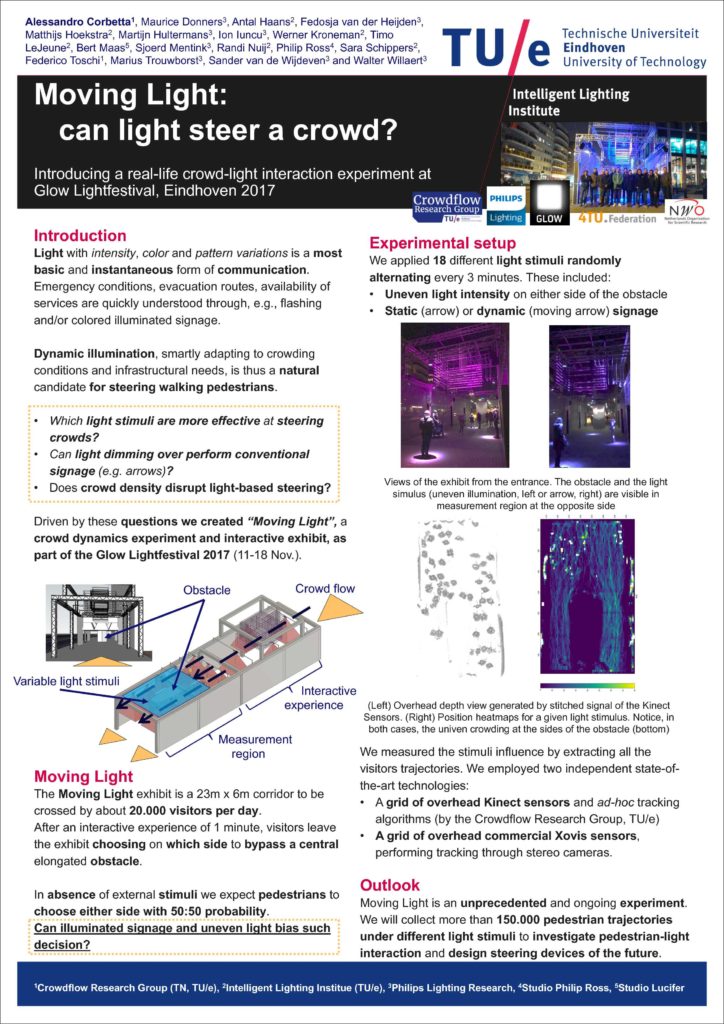

Introduction

Light, through variations in intensity, color and pattern, is one of the most basic and immediate forms of communication.

Emergency conditions, evacuation routes and the availability of services are quickly understood through, e.g., flashing and/or colored illuminated signage.

Dynamic illumination, smartly adapting to crowding conditions and infrastructural needs, is thus a natural candidate for steering walking pedestrians.

- Which light stimuli are more effective at steering crowds?
- Can light dimming outperform conventional signage (e.g. arrows)?
- Does crowd density disrupt light-based steering?

Driven by these questions we created “Moving Light”, a crowd dynamics experiment and interactive exhibit, as part of the Glow Lightfestival 2017 (11-18 Nov.).

#movingLightGlow

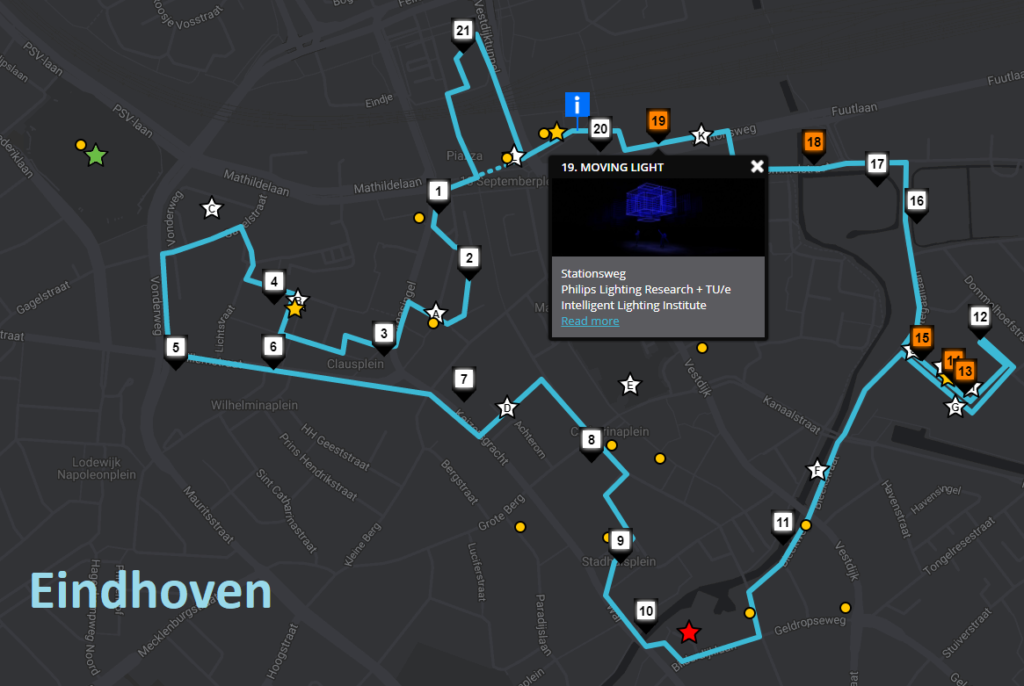

The Moving Light exhibit is a 23 m x 6 m corridor to be crossed by about 20,000 visitors per day. After a one-minute interactive experience, visitors leave the exhibit by choosing on which side to pass a central elongated obstacle.

In the absence of external stimuli, we expect pedestrians to choose either side with 50:50 probability.

Can illuminated signage and uneven light sway this decision?
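One way to check whether an observed left/right split departs from the 50:50 baseline is an exact two-sided binomial test. The sketch below is only an illustration and not necessarily the analysis used for the experiment: the function name `binomial_two_sided_p` and the counts in the usage example are hypothetical, and the test assumes that pedestrians choose independently of one another, which may not hold in a dense crowd.

```python
from math import exp, lgamma, log


def binomial_two_sided_p(left: int, total: int, p: float = 0.5) -> float:
    """Exact two-sided binomial p-value for `left` successes out of `total`.

    `left` is the number of pedestrians passing the obstacle on one side,
    `total` the number of pedestrians observed, and `p` the baseline
    probability of choosing that side (0.5 for the 50:50 hypothesis).
    """
    if total <= 0 or not 0 <= left <= total:
        raise ValueError("need total > 0 and 0 <= left <= total")
    if not 0.0 < p < 1.0:
        raise ValueError("p must lie strictly between 0 and 1")

    def pmf(k: int) -> float:
        # Evaluate the binomial probability in log space so that large
        # counts (thousands of pedestrians) do not overflow.
        log_coeff = lgamma(total + 1) - lgamma(k + 1) - lgamma(total - k + 1)
        return exp(log_coeff + k * log(p) + (total - k) * log(1.0 - p))

    observed = pmf(left)
    # Sum the probabilities of all outcomes that are at most as likely as
    # the observed one; the small tolerance absorbs floating-point ties.
    tail = sum(q for q in (pmf(k) for k in range(total + 1))
               if q <= observed * (1.0 + 1e-7))
    return min(1.0, tail)


if __name__ == "__main__":
    # Hypothetical counts, not measurements from the exhibit.
    left_count, total_count = 560, 1000
    print(binomial_two_sided_p(left_count, total_count))
```

A small p-value would suggest that the split is unlikely under the 50:50 baseline; comparing p-values or observed fractions across lighting conditions would then indicate which stimuli shift the choice most.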

We share the design and very preliminary results at the ILIAD 17 conference in Eindhoven with the poster below.

See the high-resolution version on ResearchGate or contact us.

Tweets about Moving Light (#movingLightGlow)

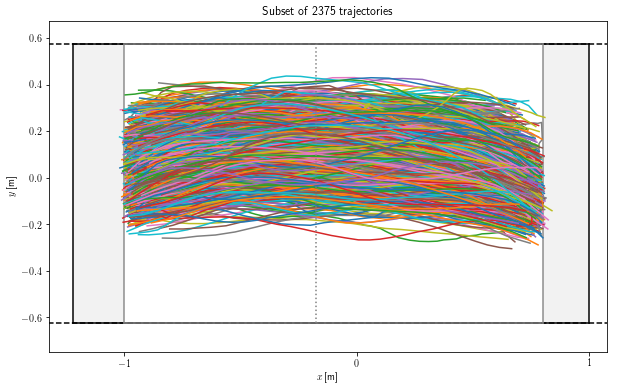

#movingLightGlow Kinect tracking is on! System on its way for the “Moving Light” exhibit / experiment at #glow pic.twitter.com/0aKGctrJlv

— Alessandro Corbetta (@alecorbetta) November 8, 2017

Check it out this Pioneering Research on #CrowdDynamics

Moving Light: can light steer a crowd? @alecorbetta keep us update! https://t.co/rc3niHDZfM pic.twitter.com/V9jm2TO9HY

— Evacuation Modelling (@EvacuationModel) November 17, 2017

Building #movingLightGlow ! #GLOW pic.twitter.com/exTrBZVfxk

— Alessandro Corbetta (@alecorbetta) November 8, 2017

TU/e and Philips make GLOW-goers ‘complicit’ https://t.co/LEJeCa2NY7

— Antal Haans (@AntalHaans) November 5, 2017

The #movingLightGlow experiment is already on poster at the ILI outreach event! pic.twitter.com/YzRx08IKUM

— Alessandro Corbetta (@alecorbetta) November 14, 2017

About Moving Light

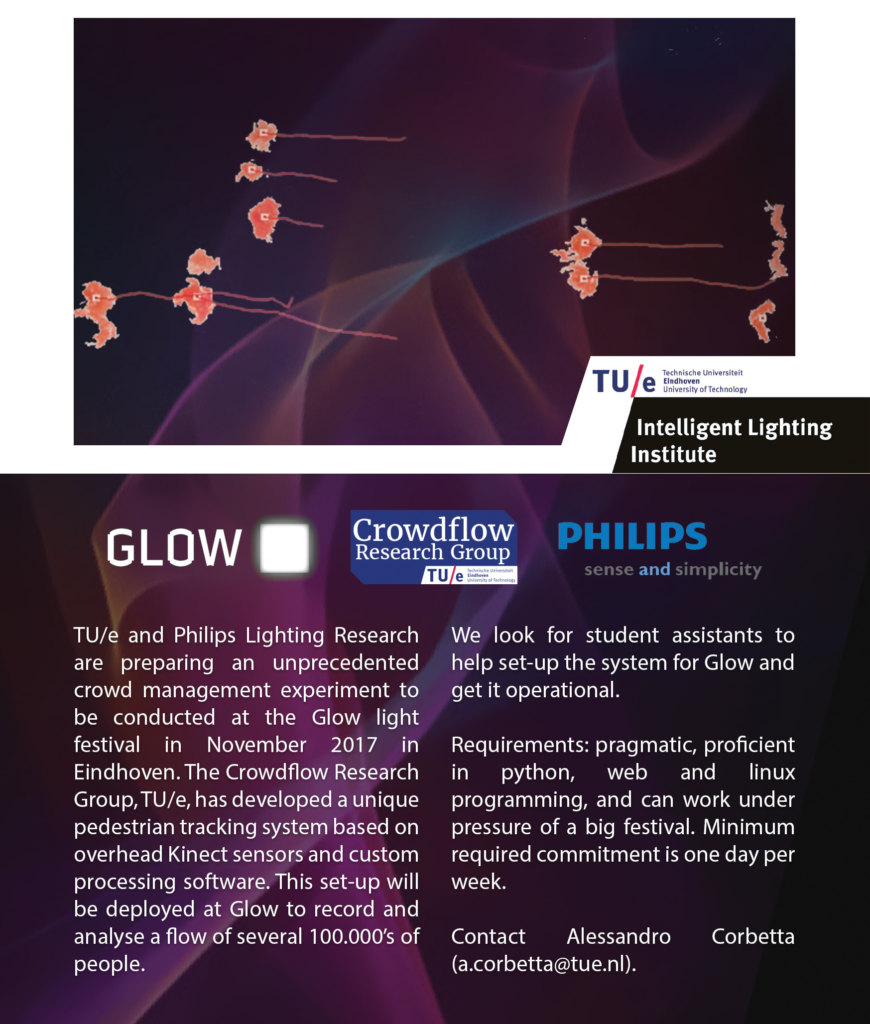

Moving Light has been created by Alessandro Corbetta¹, Maurice Donners², Antal Haans³, Fedosja van der Heijden², Matthijs Hoekstra³, Martijn Hultermans², Ion Iuncu², Werner Kroneman¹, Timo LeJeune², Bert Maas⁴, Sjoerd Mentink², Randi Nuij³, Philip Ross⁵, Sara Schippers³, Dragan Sekulovski², Federico Toschi¹, Marius Trouwborst², Sander van de Wijdeven² and Walter Willaert²

¹ Crowdflow Research Group, TU/e, Dept. of Applied Physics

² Philips Lighting Research

³ Intelligent Lighting Institute, TU/e

⁴ Studio Lucifer, Eindhoven

⁵ Studio Philip Ross, Eindhoven

Moving Light has been further supported by

![]()